I’m a retired Unix admin. It was my job from the early '90s until the mid '10s. I’ve kept somewhat current ever since by running various machines at home. So far I’ve managed to avoid using Docker at home, even though I have a decent understanding of how it works: although I stopped being a sysadmin in the mid '10s, I still worked for a technology company and did plenty of “interesting” reading and training.

It seems that more and more stuff that I want to run at home is being delivered as Docker-first and I have to really go out of my way to find a non-Docker install.

I’m thinking it’s no longer a fad and I should invest some time getting comfortable with it?

I would absolutely look into it. Many years ago when Docker emerged, I did not understand it and called it “Hipster shit”. But a lot of people around me who used Docker at that time did not understand it either. Some lost data, some had services that stopped working and they had no idea how to fix them.

Years passed and containers stayed, so I started to take a closer look and tried to understand it: what you can do with it and what you cannot. As others here said, I also had to learn how to troubleshoot, because stuff now runs inside a container and you don’t just copy a new binary or library into a container to try to fix something.

Today, my homelab runs 50 containers and I am not looking back. When I rebuilt my homelab this year, I went full Docker. The most important reason for me was that every application I run dockerized is predictable and isolated from the others (on the binary side; the network side is another story). The issue I had earlier, when running everything directly on the Linux box, was conflicts such as one application needing PHP 8.x while another, older one still only runs with PHP 7.x. Or multiple applications depend on a specific library and, after updating it, one app works while the other doesn’t anymore because it would need an update too. Running an apt upgrade was always a very exciting moment… and not in a good way. With Docker I do not have these problems. I can update each container on its own. If something breaks in one container, it does not affect the others.
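A minimal sketch of that PHP situation as a Compose file, assuming two hypothetical apps (`legacy-app` and `new-app`, with made-up directories and host ports) and the official `php` images from Docker Hub. Each service brings its own PHP runtime, so updating one does not touch the other:

```yaml
# Hypothetical example: service names, directories and host ports are assumptions.
services:
  legacy-app:
    image: php:7.4-apache      # older app that still needs PHP 7.x
    volumes:
      - ./legacy-app:/var/www/html
    ports:
      - "8081:80"
    restart: unless-stopped

  new-app:
    image: php:8.3-apache      # newer app on PHP 8.x
    volumes:
      - ./new-app:/var/www/html
    ports:
      - "8082:80"
    restart: unless-stopped
```

Something like `docker compose pull new-app && docker compose up -d new-app` would then update only the newer app.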

Another big plus is the backups you can do. I back up every docker-compose file plus the data for each container with Kopia. Since barely anything is installed in Linux directly, I can spin up a VM, restore my backups with Kopia and start all containers again to test my backup strategy. Stuff just works. No fiddling with the Linux system itself, adjusting tons of config files and installing hundreds of packages to get all my services up and running again when I have a hardware failure.

I really started to love Docker, especially in my Homelab.

Oh, and you would think resource usage is high when everything is containerized? My 50 containers right now consume less than 6 GB of RAM, and I run stuff like Jellyfin, Pi-hole, Home Assistant, Mosquitto, multiple Kopia instances, multiple Traefik instances with CrowdSec, Logitech Media Server, Tandoor, Zabbix and a lot of other things.

It seems like docker would be heavy on resources since it installs & runs everything (mysql, nginx, etc.) numerous times (once for each container), instead of once globally. Is that wrong?

You would think so, yes. But to my surprise, my well over 60 containers so far consume less than 7 GB of RAM, according to htop. Also, of course, containers can network and share services. For external access, for example, I run only one instance of Traefik. Or one coturn for Nextcloud and Synapse.
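A rough sketch of the "one shared reverse proxy" idea, assuming Traefik's Docker provider and the small `traefik/whoami` test image; the hostname and service names are placeholders, not the commenter's actual setup. Only Traefik publishes a port, and any number of services can sit behind it via labels:

```yaml
# Hypothetical example: hostname and the whoami test service are assumptions.
services:
  traefik:
    image: traefik:v3.0
    command:
      - "--providers.docker=true"
      - "--providers.docker.exposedbydefault=false"  # only route containers that opt in
      - "--entrypoints.web.address=:80"
    ports:
      - "80:80"
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock:ro
    restart: unless-stopped

  whoami:
    image: traefik/whoami        # no published ports; reachable only through Traefik
    labels:
      - "traefik.enable=true"
      - "traefik.http.routers.whoami.rule=Host(`whoami.localhost`)"
      - "traefik.http.routers.whoami.entrypoints=web"
    restart: unless-stopped
```

Further services would follow the `whoami` pattern with their own router name and host rule, rather than each shipping its own proxy.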

The backup and easy set up on other servers is not necessarily super useful for a homelab but a huge selling point for the enterprise level. You can make a VM template of your host with docker set up in it, with your Compose definitions but no actual data. Then spin up as many of those as you want and they’ll just download what they need to run the images. Copying VMs with all the images in them takes much longer.

And regarding the memory footprint, you can get that even lower using Podman because it’s daemonless. But it is a little more work to set things up to auto-start because you have to manually put it into systemd. It’s still a great option, though; it also works on Windows and is able to parse Compose configs too. Just running Docker Desktop on Windows takes up like 1.5 GB of memory for me. But I still prefer it because it has some convenient features.

I’m a VMware and Windows admin in my work life. I don’t have extensive knowledge of Linux but I have been running Raspberry Pis at home. I can’t remember why but I started to migrate away from installed applications to docker. It simplifies the process should I need to reload the OS or even migrate to a new Pi. I use a single docker-compose file that I just need to copy to the new Pi and then run to get my apps back up and running.

linuxserver.io make some good images and have example configs for docker-compose

If you want to have a play just install something basic, like Pihole.

dude, im kinda you. i just jumped into docker over the summer… feel stupid not doing it sooner. there is just so much pre-created content, tutorials, you name it. its very mature.

i spent a weekend containering all my home services… totally worth it and easy as pi[hole] in a container!

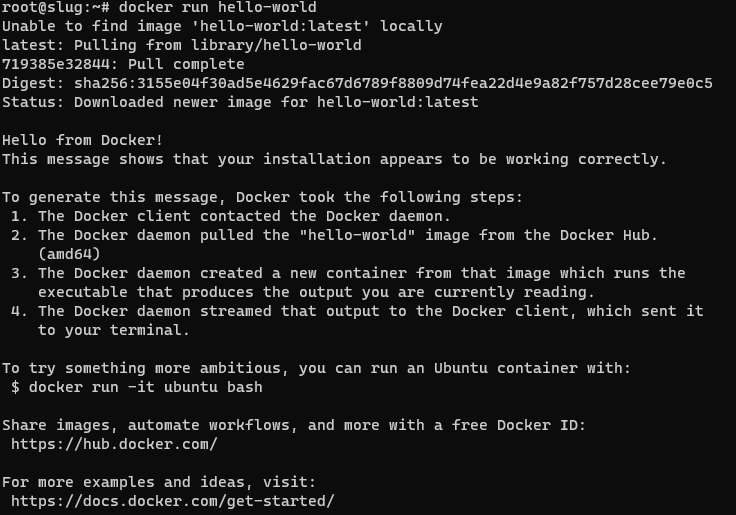

Well, that wasn’t a huge investment :-) I’m in…

I understand I’ve got LOTS to learn. I think I’ll start by installing something new that I’m looking at with docker and get comfortable with something my users (family…) are not yet relying on.

Forget `docker run`, `docker compose up -d` is the command you need on a server. Get familiar with a UI, it makes your life much easier at the beginning: Portainer or Yacht in the browser, lazydocker in the terminal.

Dockge is amazing for people that see the value in a GUI but want it to stay the hell out of the way. https://github.com/louislam/dockge lets you use Compose without trapping your stuff in stacks like Portainer does. If you decide you don’t like Dockge, you just go back to the CLI and do your `docker compose up -d --force-recreate`.

`# docker compose up -d`
`no configuration file provided: not found`

You need to create a docker-compose.yml file. I tend to put everything in one dir per container, so I just have to move the dir somewhere else if I want to move that container to a different machine. Here’s an example I use for Picard, with examples of NFS mounts and local bind mounts with paths relative to the directory the docker-compose.yml is in. You basically just put this in a directory, create the local bind mount dirs in that same directory, and adjust YOURPASS and the mounts/NFS shares, and it will keep working everywhere you move the directory as long as the system has Docker and the image is available for its architecture.

```yaml
version: '3'
services:
  picard:
    image: mikenye/picard:latest
    container_name: picard
    environment:
      KEEP_APP_RUNNING: 1
      VNC_PASSWORD: YOURPASS
      GROUP_ID: 100
      USER_ID: 1000
      TZ: "UTC"
    ports:
      - "5810:5800"
    volumes:
      - ./picard:/config:rw
      - dlbooks:/downloads:rw
      - cleanedaudiobooks:/cleaned:rw
    restart: always
volumes:
  dlbooks:
    driver_opts:
      type: "nfs"
      o: "addr=NFSSERVERIP,nolock,soft"
      device: ":NFSPATH"

  cleanedaudiobooks:
    driver_opts:
      type: "nfs"
      o: "addr=NFSSERVERIP,nolock,soft"
      device: ":OTHER NFSPATH"
```

If you have a homelab and are not using containers, you are missing out A LOT! Docker-compose is a beautiful thing for a homelab. <3

It took me a while to convert to Docker, I was used to installing packages for all my Usenet and media apps, along with my webserver. I tried Docker here and there but always had a random issue pop up where one container would lose contact with the other, even though they were in the same subnet. Since most containers only contain the bare minimum, it was hard to troubleshoot connectivity issues. Frustrated, I would just go back to native apps.

About a year or so ago, I finally sat down and messed around with it a lot, and then wrote a compose file for everything. I’ve been gradually expanding upon it and it’s awesome to have my full stack setup with like 20 containers and their configs, along with an SSL secured reverse proxy in like 5-10 minutes! I have since broken out the compose file into multiple smaller files and wrote a shell script to set up the necessary stuff and then loop through all the compose files, so now all it takes is the execution of one command instead of a few hours of manual configuration!
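The commenter uses a shell script for this. As a different technique aimed at the same goal, recent Docker Compose releases (2.20 and later, as far as I know) also accept a top-level `include` element that pulls several smaller Compose files into one project. A sketch, where the file paths are hypothetical:

```yaml
# Top-level compose.yaml; the included paths are made-up examples.
include:
  - proxy/compose.yaml    # e.g. the reverse proxy
  - media/compose.yaml    # e.g. media apps
  - usenet/compose.yaml   # e.g. download clients
```

With a layout like this, one `docker compose up -d` in the top-level directory should bring up everything the included files define. Any host preparation the script does (creating directories, setting permissions) would still need its own step.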

It just makes things easier and cleaner. When you remove a container, you know there are no leftovers except mounted volumes. I like it.

It’s also way easier if you need to migrate to another machine for any reason.

I use LXC for all the reasons most people use Docker, it’s easy to spin up a new service, there are no leftovers when I remove a service, and everything stays separate. What I really like about LXC though is that you can treat containers like VMs, you start it up, attach and install all your software as if it were a real machine. No extra tech to learn.

The main downside of Docker images is that app developers don’t tend to pay a lot of attention to the images that they produce beyond shipping their app. While software installed via your distribution benefits from meticulous scrutiny by security teams making sure security issues are fixed in a timely fashion, those fixes rarely trickle down the chain of images that your container ultimately depends on. While your distribution’s package manager sets up a cron job to install fixes from the security channel automatically, with Docker you are back to keeping track of this by yourself, hoping that the app developer takes this seriously enough to supply new images in a timely fashion. This multiplies by the number of images, so you are always only as secure as the least well maintained image.

Most images, including latest, are piss-poor quality from a security standpoint. Because of that, professionals do not tend to grab “off the shelf” images from random sources on the internet. If they do, they pay extra attention to ensure that these containers run in a sufficiently isolated environment.

Self hosting communities do not often pay attention to this. You’ll have to decide for yourself how relevant this is for you.

Don’t learn Docker, learn containers. Docker is merely one of the first runtimes, and a rather shit one at that (it’s a bunch of half-baked projects - container signing as one major example).

Learn Kubernetes, k3s is probably a good place to start. Docker-compose is simply a proprietary and poorly designed version of it. If you know Kubernetes, you’ll quickly be able to pick up docker-compose if you ever need to.

You can use `buildah bud` (part of the Podman ecosystem) to build containerfiles (exactly the same thing as dockerfiles without the trademark). Buildah can also be used without containerfiles (your containerfile simply becomes a script in the language of your choice, e.g. bash), which is far more versatile. Speaking of Podman, if you want to keep things really simple you can manually create a bunch of containers in a pod and then ask Podman to create a set of systemd units for you. Podman supports nearly all of what Docker does (with the exception of Docker’s borked signing) and has identical command line syntax. Podman can also host a Docker-compatible socket if you need to use it with something that really wants Docker.

I’m personally a big fan of Podman, but I’m also a fan of anything that isn’t Docker: LXD is another popular runtime, and containerd is (IIRC) the runtime underpinning Docker. There’s also Firecracker or KubeVirt, which go full circle and let you manage tiny VMs like containers.

All that makes sense, except that I’m talking about 1 or 2 physical servers at home, and my only real motivation for looking into containers at all is that some software I’ve wanted to install recently has shipped as docker compose scripts. If I’m going to ignore their packaging anyway and massage them into some other container management system, I would be happier just running them on bare metal like I’ve done with everything else forever.

There are teachings I have read/ discovered through YouTube (can’t remember exactly where) about the reasons and the philosophy behind moving to docker, or having it as a state machine.

Have you considered looking into Docker’s alternatives as well?

Here is 1 of the sources that may give you insights:

https://www.cloudzero.com/blog/docker-alternatives/

There have been some concerns over Docker’s licensing and, as such, some people have started preferring solutions such as Podman and containerd.

Both are good in terms of compatibility and usability, however I have not used them extensively.

Nonetheless, I am currently using docker for my own hyperserver [Edit2: oops, I meant hypervisor ✓, not hyperserver] purposes. And I am also a little concerned about the future of docker, and would consider changing sometime in the future.

[Edit1: I am using Docker because it is easy to make custom machines, with all their file configurations, and deploy them that way. It is a time saver. But performance-wise, I would not recommend it for major machines that run major processes and services. And that’s just the gist of it].

Nixos, nixos, nixos 🤌

Does Docker still give a security benefit over NixOS, because of the sandboxing?

Not familiar with nixOS but there’s probably still isolation benefits to Docker. If you care a lot about security, make sure Docker is running in rootless mode.

See this comment https://sh.itjust.works/comment/6651651

Both! Sandboxing from containers and configuration control from nix go well together!

You can use the sandboxing of nixos

You get better performance, nixos level reproducibility, and it’s not docker which is not foss and running with root

The Nix daemon itself still uses root at build/install time for now. NixOS doesn’t have any built-in sandboxing for running applications à la Docker, though it does have AppArmor support. But then, NixOS doesn’t generally have applications run as root (containerized or otherwise), unlike Docker.

You don’t need to build/install with root, you can do home-manager

And for isolation there’s one good module, I forgot its name

And if just easier but less reproducible, you can do the containers, but with nixos’ podman, and this is of course builtin

I’m honestly not sure if we are agreeing or disagreeing lol

Nix for building OCI containers is great and NixOS seems like a great base system too. It seems like a natural step to take that and use it to define a k8s system in the future as well.

I’m currently doing that with OpenTofu (Terraform’s open-source successor) and Ansible, but I feel like replacing those with Nix may provide a real completeness to the codification of the OS.

Barring k8s though, at least until it gets so simple that you might as well use it, Podman is so far the go-to way to run containers instead of Docker (for both of the reasons you mentioned!). That and Flatpaks for GUI apps because of the portals system!

Docker is amazing, you are late to the party :)

It’s not a fad, it’s old tech now.

Acronyms, initialisms, abbreviations, contractions, and other phrases which expand to something larger, that I’ve seen in this thread:

| Fewer Letters | More Letters |
|---|---|
| DNS | Domain Name Service/System |
| Git | Popular version control system, primarily for code |
| HTTP | Hypertext Transfer Protocol, the Web |
| IP | Internet Protocol |
| LXC | Linux Containers |
| NAS | Network-Attached Storage |
| PIA | Private Internet Access brand of VPN |
| Plex | Brand of media server package |
| RAID | Redundant Array of Independent Disks for mass storage |
| SMTP | Simple Mail Transfer Protocol |
| SSD | Solid State Drive mass storage |
| SSH | Secure Shell for remote terminal access |
| SSL | Secure Sockets Layer, for transparent encryption |
| VPN | Virtual Private Network |
| VPS | Virtual Private Server (opposed to shared hosting) |
| k8s | Kubernetes container management package |
| nginx | Popular HTTP server |

15 acronyms in this thread; the most compressed thread commented on today has 10 acronyms.

[Thread #349 for this sub, first seen 13th Dec 2023, 17:15]

It’s basically a vm without the drawbacks of a vm, why would you not? It’s hecking awesome

I would never go back installing something without docker. Never.

For a lot of smaller things I feel that Docker is overkill, or simply not feasible (package management, utilities like screenfetch, text editors, etc…) but for larger apps it definitely makes it easier once you wrap your head around containerization.

For example, I switched full-time to Jellyfin from Plex and was attempting to use caddy-docker-proxy to forward the host network that Jellyfin uses to the Caddy server, but I couldn’t get it to work automatically (explicitly defining the reverse proxy in the Caddyfile works without issue). I thought it would be easier to just install it natively, but since I hadn’t installed it that way in a few years I forgot that it pulls in like 30-40 dependencies since it’s written in .Net (or would that be C#?) and took a good few minutes to install. I said screw that and removed all the deps and went back to using the container and just stuck with the normal version of Caddy which works fine.

I think it’s a good tool to have on your toolbelt, so it can’t hurt to look into it.

Whether you will like it or not, and whether you should move your existing stuff to it is another matter. I know us old Unix folk can be a fussy bunch about new fads (I started as a Unix admin in the late 90s myself).

Personally, I find docker a useful tool for a lot of things, but I also know when to leave the tool in the box.