I think it’s really disingenuous to mention the DeviantArt/Midjourney/Runway AI/Stability AI lawsuit without talking about how most of the infringement claims were dismissed by the judge.

This isn’t about research into AI; what some people want will impact all research, criticism, analysis, and archiving. Please re-read the letter.

Damn, this article is so biased.

You should read this letter by Katherine Klosek, the director of information policy and federal relations at the Association of Research Libraries.

Why are scholars and librarians so invested in protecting the precedent that training AI LLMs on copyright-protected works is a transformative fair use? Rachael G. Samberg, Timothy Vollmer, and Samantha Teremi (of UC Berkeley Library) recently wrote that maintaining the continued treatment of training AI models as fair use is “essential to protecting research,” including non-generative, nonprofit educational research methodologies like text and data mining (TDM). If fair use rights were overridden and licenses restricted researchers to training AI on public domain works, scholars would be limited in the scope of inquiries that can be made using AI tools. Works in the public domain are not representative of the full scope of culture, and training AI on public domain works would omit studies of contemporary history, culture, and society from the scholarly record, as Authors Alliance and LCA described in a recent petition to the US Copyright Office. Hampering researchers’ ability to interrogate modern in-copyright materials through a licensing regime would mean that research is less relevant and useful to the concerns of the day.

It was a different world when this show aired. https://youtu.be/rMoDslz0EtI

One can imagine Grape-kun died happy.

Yeah, I really don’t get why this is news I have to keep hearing about.

That title looks like a typical sports anime title.

I could go on, but I think I’ve made my point.

Have you read this article by Cory Doctorow yet?

He blames Monster Hunter being available on PC for cheating, but Monster Hunter has always had tools and cheats. An absolute trash take.

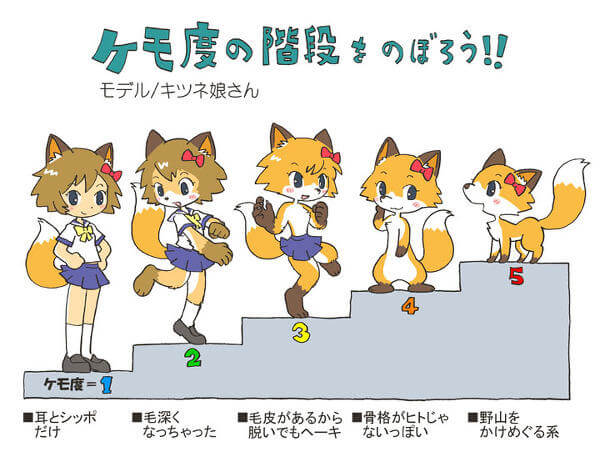

She can’t be fully 2 without the paws and face though.

But without the face she isn’t quite there yet.

I’d say you’re mostly safe since we’re not even reaching step two.

You should read One Piece.

It should be fully legal because it’s still a person doing it. As Cory Doctorow said in this article:

Break down the steps of training a model and it quickly becomes apparent why it’s technically wrong to call this a copyright infringement. First, the act of making transient copies of works – even billions of works – is unequivocally fair use. Unless you think search engines and the Internet Archive shouldn’t exist, then you should support scraping at scale: https://pluralistic.net/2023/09/17/how-to-think-about-scraping/

Making quantitative observations about works is a longstanding, respected and important tool for criticism, analysis, archiving and new acts of creation. Measuring the steady contraction of the vocabulary in successive Agatha Christie novels turns out to offer a fascinating window into her dementia: https://www.theguardian.com/books/2009/apr/03/agatha-christie-alzheimers-research

The final step in training a model is publishing the conclusions of the quantitative analysis of the temporarily copied documents as software code. Code itself is a form of expressive speech – and that expressivity is key to the fight for privacy, because the fact that code is speech limits how governments can censor software: https://www.eff.org/deeplinks/2015/04/remembering-case-established-code-speech/

That’s all these models are: someone’s analysis of the pieces of training data in relation to each other, not the data itself. I feel like this is where most people get tripped up. Understanding how these things work makes it all obvious.

Watching that made me sick.

I didn’t expect to see a Mega Man Battle Network reference here.

It sounds a lot like this quote from Andrej Karpathy:

Turns out that LLMs learn a lot better and faster from educational content as well. This is partly because the average Common Crawl article (internet pages) is not of very high value and distracts the training, packing in too much irrelevant information. The average webpage on the internet is so random and terrible it’s not even clear how prior LLMs learn anything at all.

You should read these two articles from Cory Doctorow. I think they’ll help clear up some things for you.

https://pluralistic.net/2024/05/13/spooky-action-at-a-close-up/#invisible-hand

https://pluralistic.net/2023/02/09/ai-monkeys-paw/