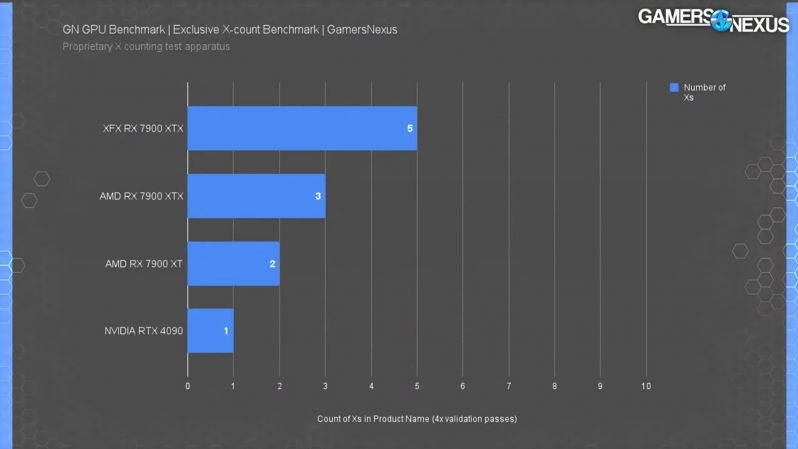

The RX 7900 XTX is better because it has more Xs.

The best thing about this is that it’s also on the x-axis.

And they validated this data 4 times. It’s really good data.

Actually, they only validated up to four Xs in the product name, so take the 5x result with a grain of salt: one of the Xs isn't validated.

“Thanks Steve.”

X is deprecated. Use the RWayland 7900 WaylandTWayland instead.

That's 50% more Xs! You can't beat this deal!

This bad boy can fit so many X’s in it

Out of all the marketing letters (E, G, I, R, T and X) X is definitely the betterest!

How many X are in XTX?

But what about the xXx_RX_7900_xXx?

xXx_RX_790042069_xXx

But X is bad, as proved by Elon Musk - so it should be the other way around.

That X gets you 4 GB more VRAM (24 GB vs. 20 GB), which, funnily enough, is great for running AI models.

My question is, is X better than XTX? XTX has more Xs, but X has only Xs. I think I need AI to solve this quandary.

There’s nothing contradictory in what is written there.

“The XTX is better - but you don’t deserve it, bitch”

Go with the XT, because I’m an evil AI and want all the XTXs for myself muahahaha

This is not wrong

“I get headaches when I run on Nvidia hardware. Now, AMD, running on those things is like swimming in a river of fine chocolate.”


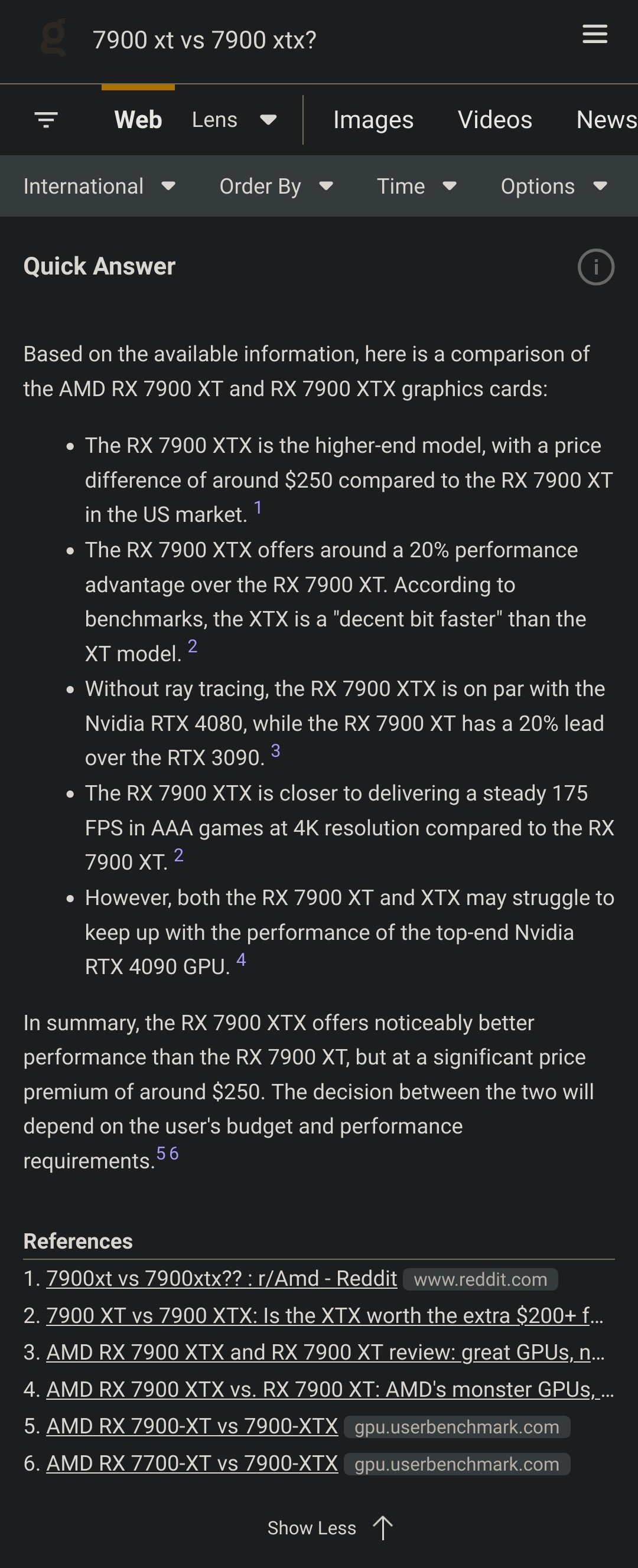

I switched to DuckDuckGo before this bullshit, but this would 100% make me switch if I hadn't already.

Who wants random AI gibberish to be the first thing they see?

If search engines don’t improve to address the AI problem, most of the Internet will be AI gibberish.

I think that ship has sailed

The internet as we knew it is doomed to be full of AI garbage. It's a signal-to-noise ratio issue. It's also part of the reason the fediverse and smaller, moderated, interconnected communities are so important: they keep users more honest by making moderators more common, and, if you want, you can strictly moderate against AI-generated content.

The good thing about that is that it kills the LLMs: new models can only be trained on this LLM-generated gibberish, which makes the gibberish they generate even more garbled and useless, and so on, until every model you try to train can only produce random, useless, unintelligible garbage.

DuckDuckGo started showing AI results for me.

I think it uses the Bing engine, IIRC.

Sure, but it’s trivial to turn it off. While you’re there, also turn off ads.

And you can use multiple models, which I find handy.

There is some stuff that AI, or rather LLM search, is useful for, at least for the time being.

Sometimes you need some information that would require clicking through a lot of sources just to find one that has what you need. With DDG, I can ask all four of their models* the same question, using four different Firefox containers and copy-pasting the prompt.

Then I see how their answers align and pick out keywords from their responses that help me craft a precise search query to find the obscure primary source I need.

This is especially useful when you don’t know the subject that you’re searching about very well.

*ChatGPT, Claude, Llama, and Mixtral are the available models. Relatively recent versions, but you’ll have to check for yourself which ones.

Better than an ad, I guess? I'm not sure whether my searches just haven't returned any AI stuff like this or my brain is already tuning it out like ads.

The plan is to monetize the AI results with ads.

I’m not even sure how that works, but I don’t like it.

Sounds like the advice you'd get in the first three comments after asking a question on Reddit.

I wonder where they trained the AI model to answer such a question lol.

Don’t forget the glue on the pizza

A.I. or Assumed Intelligence

That’s pretty much it. It just assumes what the next word should be.


It's not artificial intelligence, it's artificial idiocy.

Nah. It’s real idiocy.

It's all probability: what's the most probable idiocy someone would answer with?

Stochastic parrot

No, they’re All Interns.

Here is what Kagi delivers with the same prompt:

NB: the Quick Answer is only generated when you end your search with a question mark.

It's not completely useless… I only asked Copilot one thing and it worked: “How do I remove or completely disable Copilot?”

Well, I’m sated.

7900 XTX; more powerful, therefore better.

7900 XT; cheaper, therefore better.

It goes without saying that this shit doesn't really understand what it's outputting; it's picking words and piecing together a grammatically coherent whole, with barely any regard for semantics (meaning).

It should not be trying to provide you info directly, it should be showing you where to find it. For example, linking this or this*.

To add insult to injury, in this case it isn't even providing you info; it's bossing you around. Typical Microsoft “don't inform a user, tell it [yes, “it”] what it should be doing” mindset. Especially bad in this case because cost vs. benefit varies a fair bit depending on where you are, and often there's no single “right” answer.

*OP, check those two links, they might be useful for you.

LLMs don’t “understand” anything, and it’s unfortunate that we’ve taken to using language related to human thinking to talk about software. It’s all data processing and models.

Yup, 100% this. And there’s a crowd of muppets arguing “ackshyually wut u’re definishun of unrurrstandin/intellijanse?” or “but hyumans do…”, but come on - that’s bullshit, and more often than not sealioning.

Don't get me wrong: model-based data processing is still useful in quite a few situations. But those situations are only a fraction of what big tech pretends LLMs are useful for.

Yeah, I'm far from anti-AI, but we're just not anywhere close to where people think we are with it. And I'm pretty sick of corporate leadership saying “We need to make more use of AI” without knowing the difference between an LLM and a machine learning application, or having any idea *how* their company could make use of either technology.

It really feels like one of those hammer in search of a nail things.

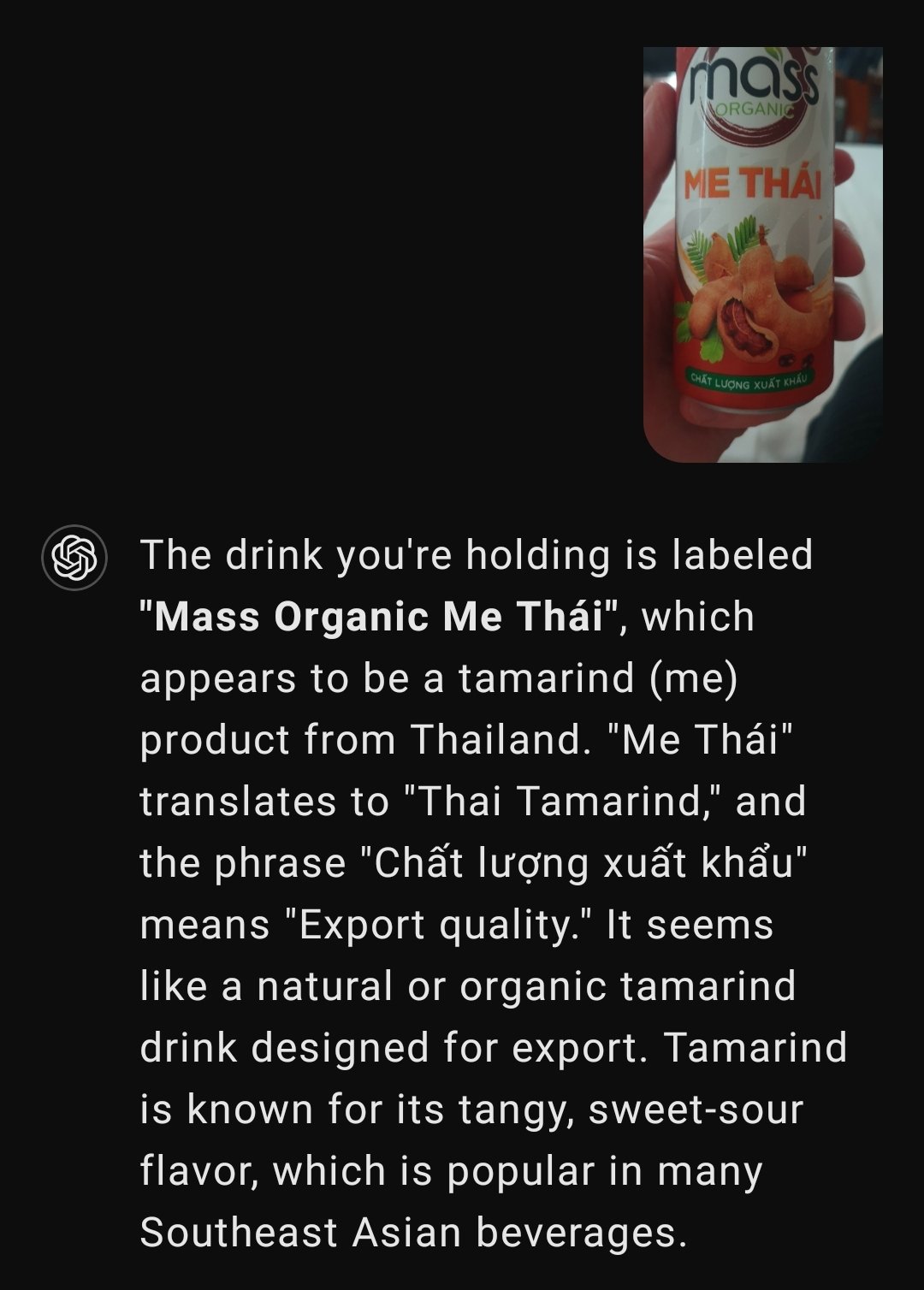

ChatGPT 4o can do some impressive and useful things. Here, I'm just sending it a mediocre photo of a product with no other context; I didn't type a question. First, it identifies the subject, a drink can. Then it identifies the language used. Then it assumes I want to know about the product, so it translates the text without being asked, because it knows I only read English. Then it provides background and explains what tamarind is and how it tastes. This is enough for me to make a fully informed decision. Google Translate would require me to type the text in, and then it would only translate, without giving any other useful info.

It was delicious.

Google: ok so the AI in search results is a good thing, got it!

The search engine LLMs suck. I’m guessing they use very small models to save compute. ChatGPT 4o and Claude 3.5 are much better.

And good luck typing that in if you don’t know the alphabet it’s written in and can’t copy/paste it.

At the very least it failed in an obvious way, by giving you contradictory statements. If it had left you with only the wrong statements, that's when “AI” becomes really insidious.

I checked it, it's true. Side note: it's “the saté of AI.” FTFY. From what I've heard it's even better than 🍿 to sit back and watch this farce unfold.

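SearXNG aggregates results from multiple search engines and ranks results higher when several engines agree on them. Since it only pulls the engines' regular results, that removes ads and this bullshit.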